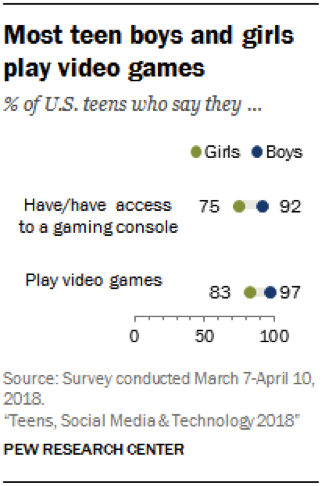

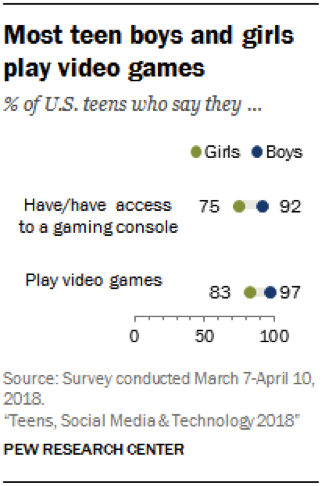

With most teen boys and girls in the US playing video games in 2018, I want to share some key points parents and guardians should know about online moderation and the human and technological approaches behind it. I have spent 10 years helping foster healthier online communities, including six years at Disney Online Studios, and have built a background in digital citizenship and online safety. I’m currently the Director of Community Trust & Safety at Two Hat Security. Every month we process 22 billion lines of text, along with images and videos, proactively filtering negative content and encouraging positive community interactions for the largest gaming companies and social platforms in the world.

The key objective of this article is to help you understand what safety features are available in online games. I also hope this information will give you common ground for conversations with your child about online games.

Online games usually allow users to create their own usernames and chat with other players. In some cases, users can also upload a profile picture and take part in forums. These features have real benefits: players can form bonds, interact and make friends in those spaces. However, they also bring challenges. User-generated content opens the door to negative and anti-social behaviour, including cyberbullying, cheating, hate speech and more.

But first, what is moderation in the context of an online gaming community?

Moderation of User-Generated Content

Moderation is the discipline of ensuring that user-generated content is appropriate and stays within the boundaries set by a platform’s community guidelines and Terms of Use. It can be done with tools that automate much of the process, entirely by hand by a team of moderators, or with a combination of both.

For example, image moderation could involve staff members (known as moderators) reviewing every image players post on a forum or profile page, deciding whether it is appropriate for that platform and its audience, and removing it if needed. Artificial intelligence models can also be used to quickly identify problematic content and then automatically approve or reject images based on the community guidelines.

#1 Community Guidelines and Parent Portals

Community guidelines set the tone and expected behaviour of an online space. One example is PopJam, a kids’ social app owned by SuperAwesome, which provides concise, well-crafted community guidelines for users and parents.

Strong community guidelines reflect the very purpose of a platform and its community. Critically, they articulate what that online space stands for.

Tip: Review the community guidelines of any new game your child wants to play. Also check whether the game’s website has a portal or section specifically for parents. Animal Jam and Roblox, for example, both offer parent portals.

There are other valuable resources for parents as well, including Patricia E. Vance’s article “Fortnite Battle Royale: Everything Parents Need to Know”.

#2 Chat Moderation

Many platforms have an in-game chat feature, where players communicate in real time, either in a group or, sometimes, privately.

Some game companies use a proactive filter that blocks content like cyber bullying, hate speech, personally identifying information, and sexual talk, all in real time. That’s an ideal first layer of protection.

In some cases, you can find out whether a game takes this proactive measure by reading its Terms of Use.

Tip: You can contact a game’s support channels to ask about any safety mechanisms it has. Alternatively, many platforms have Frequently Asked Questions pages. MovieStarPlanet, a kids’ social network, is a good example of a platform that publishes this kind of safety information.

#3 User Reports

Ideally, the most damaging, high-risk content is identified and blocked by a filter so that players and moderators are never exposed to it in the first place. Many platforms also allow users to report each other for inappropriate chat. This can include chat that an automated system missed, or content that needs more context and a human review.

Once a report is filed, a trained human moderator will normally review it and determine whether the rules were broken. If they were, the offending account might be warned, suspended temporarily or banned permanently, depending on the severity and context.

Some games even provide feedback to players, letting them know that their reports resulted in action. Extra points for that!

If your child is ever uncomfortable with what another player is saying in a game, encourage them not only to bring it up with you but also to report the other player if needed.

#4 Block, Ignore or Mute Features

Games might also let players “block”, “ignore” or “mute” someone, so that they stop seeing messages from that user. It’s a good feature for young players to know about.

It’s important to remember, though, that the intention is not to put the onus solely on players to respond to negative and anti-social behaviour. Gaming companies should be doing their part, and many are, by designing experiences that are conducive to positive interactions and by applying proactive techniques like chat filtering. That way, “blocking” or “muting” someone becomes an added safety feature rather than the default option.

#5 Encouraging Positive Behaviour Through Gaming Experiences

Games can also be a fertile ground for encouraging teamwork, productive interactions and sportsmanship.

Tip: Check whether the game your child is playing was developed by a company that is committed to encouraging positive interactions in the long run. Is the company involved in any initiatives around digital citizenship, sportsmanship or online safety?

For example, I'm a co-founder and member of the Fair Play Alliance, a coalition of gaming professionals and companies committed to developing quality games. We provide an open forum for the games industry to collaborate on research and best practices that encourage fair play and healthy communities in online gaming. We envision a world where games are free of harassment, discrimination, and abuse, and where players can express themselves through play.

Safety Features and Online Etiquette as Conversation Topics

To recap, look for the following resources and features in an online game:

Community Guidelines

Parent Portals

Terms of Use and Support Channels/Frequently Asked Questions (“Is there a word filter in place?” “How do you protect players from cyberbullying?”)

Reporting Tools

Blocking, Ignoring and Muting Tools

Does the game encourage positive behaviour through game design and in-game experiences?

I hope this article provides you with a starting point for conversations about online safety and online etiquette with your children. I encourage you to connect with them around their digital experiences, and to engage with them as citizens in our new digital world!
